Project: ROS2 Computer Vision Robotic System

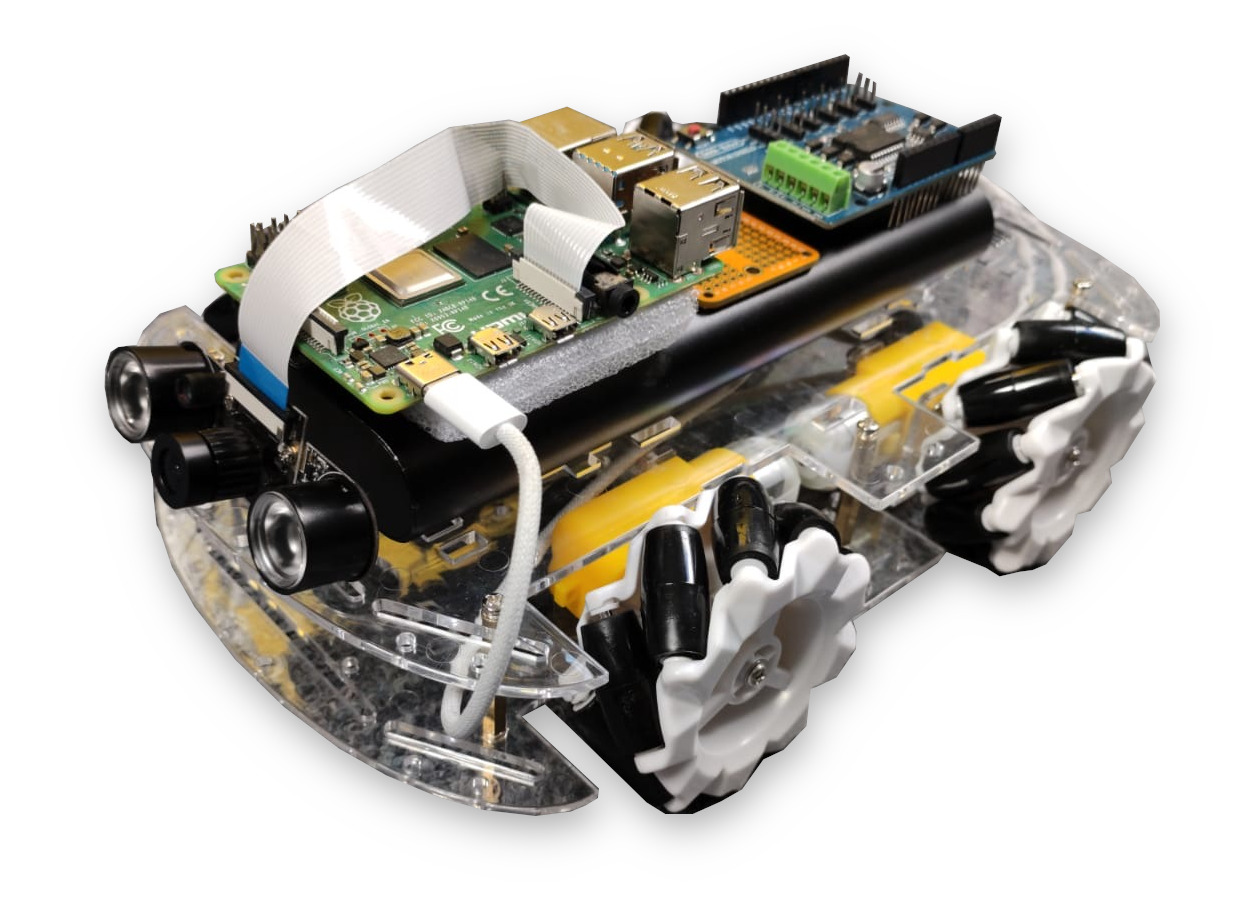

This project develops a robotic system that uses ROS2 to integrate computer vision, object recognition, and autonomous navigation. The robot is built around a Raspberry Pi running Ubuntu Server 22.04 and is intended for dynamic, real-world environments.

Project Description

In this project, I designed and implemented a robotic system that demonstrates autonomous behaviour by combining computer vision with control algorithms. The core functionality is object recognition and pose detection, which allow the robot to identify and track items in real time. I am currently integrating LiDAR for environmental mapping and navigation, using SLAM (Simultaneous Localisation and Mapping) with loop closure to keep the map accurate. Mecanum wheels paired with rotary encoders enable omnidirectional movement, giving the robot a high degree of manoeuvrability in confined and complex environments. The project is part of my master’s research and focuses on solving real-world challenges and optimising robotic navigation for soft robotics applications.
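To illustrate how mecanum wheels and encoders give omnidirectional motion, here is a minimal sketch of the standard mecanum kinematics. It is not the project's actual control code: the `MecanumGeometry` class, the function names, and the wheel ordering (front-left, front-right, rear-left, rear-right) are assumptions made for the example, and the sign convention assumes x forward, y to the left, and counter-clockwise rotation as positive.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class MecanumGeometry:
    """Hypothetical chassis dimensions; replace with measured values."""
    wheel_radius: float  # metres
    half_length: float   # half the front-to-rear axle distance, metres
    half_width: float    # half the left-to-right wheel distance, metres


def ticks_to_wheel_speed(delta_ticks: int, ticks_per_rev: int, dt: float) -> float:
    """Convert an encoder tick difference over dt seconds to rad/s."""
    return 2.0 * math.pi * delta_ticks / (ticks_per_rev * dt)


def twist_to_wheel_speeds(geom: MecanumGeometry, vx: float, vy: float, wz: float):
    """Inverse kinematics: body velocity (m/s, m/s, rad/s) -> wheel speeds (rad/s)."""
    r = geom.wheel_radius
    k = geom.half_length + geom.half_width
    fl = (vx - vy - k * wz) / r
    fr = (vx + vy + k * wz) / r
    rl = (vx + vy - k * wz) / r
    rr = (vx - vy + k * wz) / r
    return fl, fr, rl, rr


def wheel_speeds_to_twist(geom: MecanumGeometry, fl: float, fr: float, rl: float, rr: float):
    """Forward kinematics: wheel speeds (rad/s) -> body velocity, e.g. for odometry."""
    r = geom.wheel_radius
    k = geom.half_length + geom.half_width
    vx = r * (fl + fr + rl + rr) / 4.0
    vy = r * (-fl + fr + rl - rr) / 4.0
    wz = r * (-fl + fr - rl + rr) / (4.0 * k)
    return vx, vy, wz
```

A pure sideways command (`vx = 0`, `wz = 0`) drives the diagonal wheel pairs in opposite directions, which is what lets the base strafe without turning. If a wheel on real hardware spins the wrong way, its sign in these equations needs flipping to match the roller orientation and motor wiring.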

Key Features

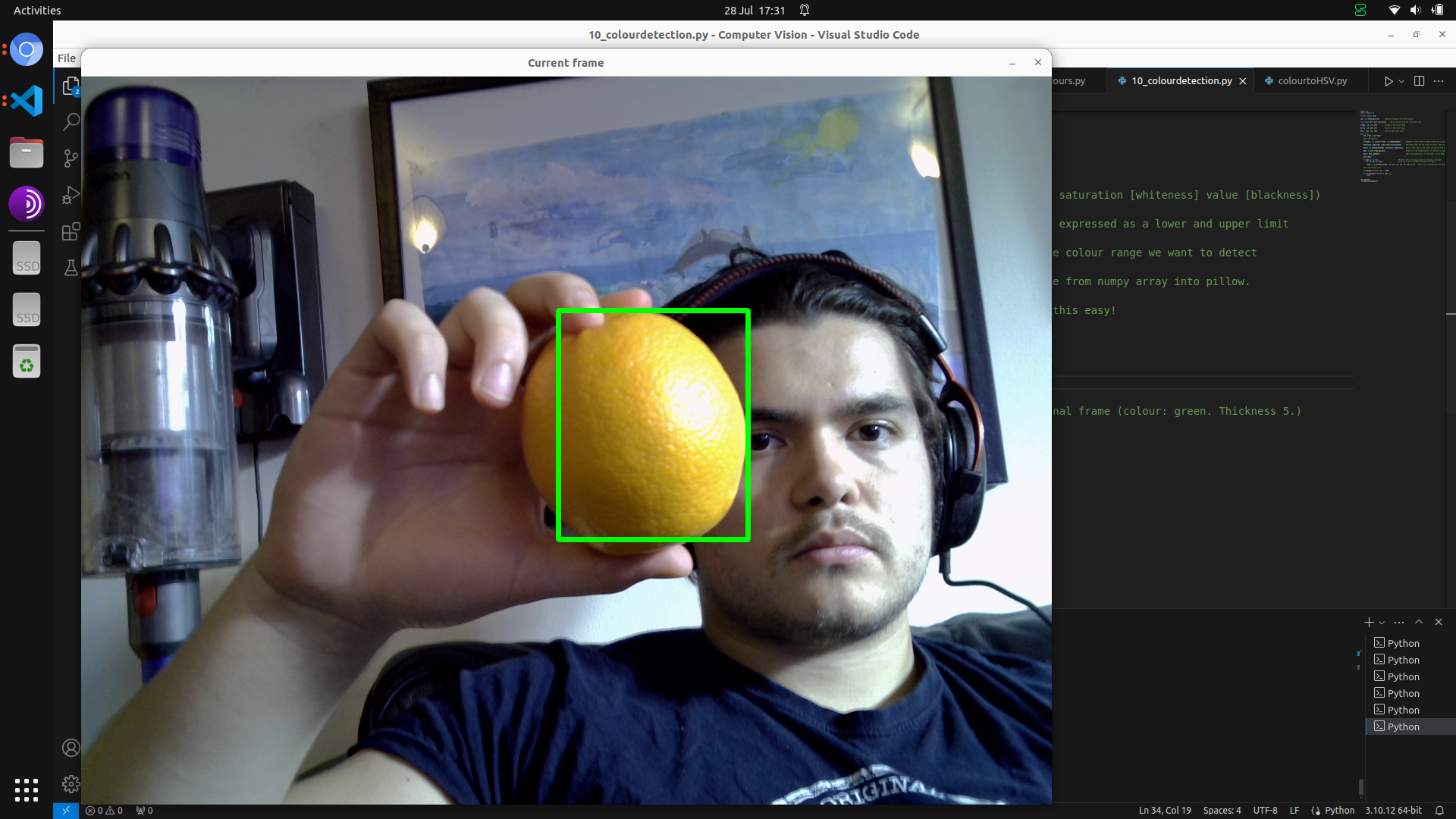

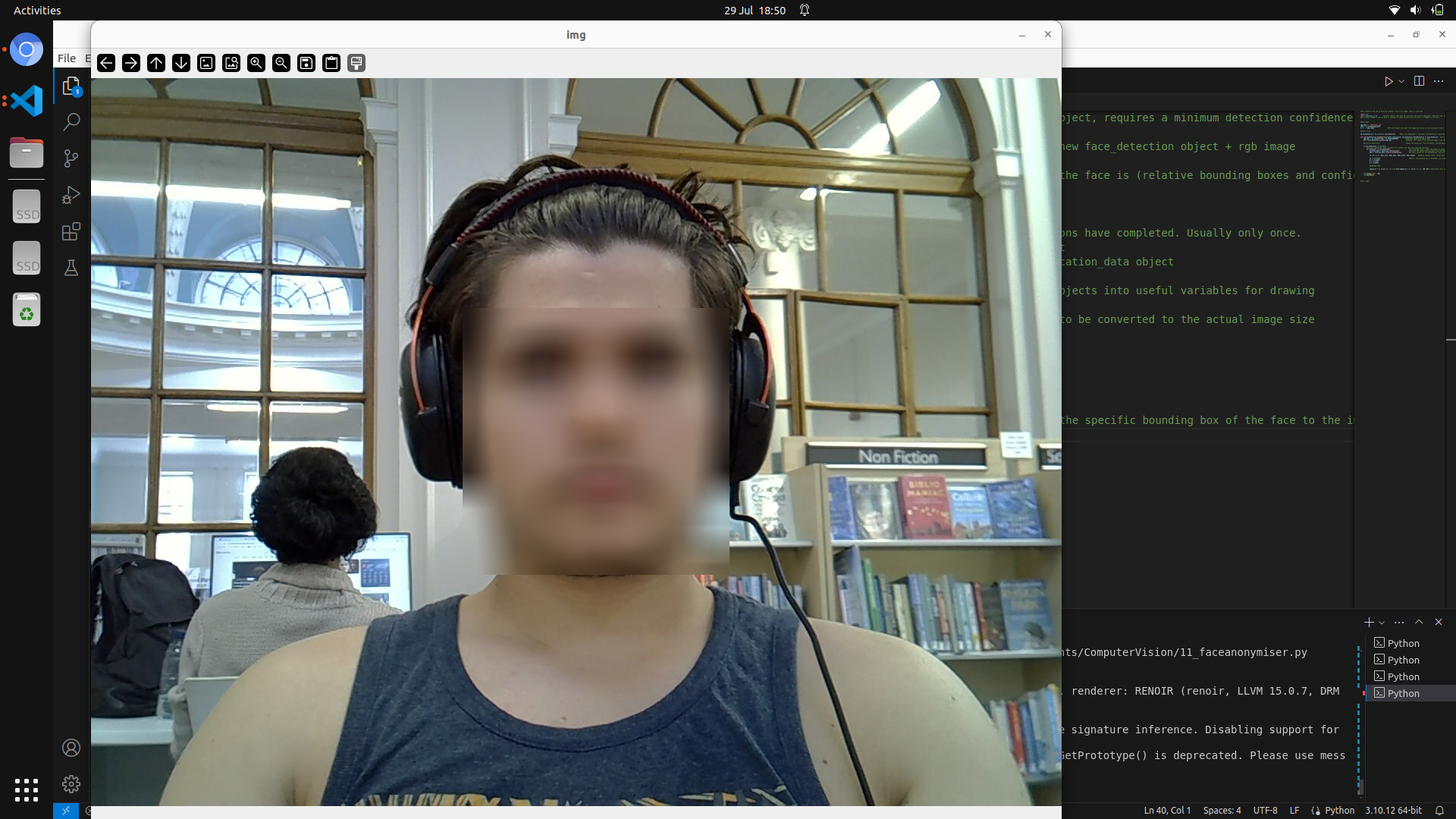

- Computer Vision with Object Recognition and Pose Detection: Utilised OpenCV and YOLOv8 to enable accurate detection and tracking of objects in various lighting and environmental conditions.

- Autonomous Navigation: Integrating ROS2 with SLAM algorithms for real-time path planning and localisation from LiDAR data (LiDAR integration is in progress).

- Omnidirectional Mobility: Designed a robotic base equipped with mecanum wheels, allowing the robot to move in any direction without turning first, which is crucial for navigating tight spaces.

- Multi-Camera System: Implemented support for various cameras to test and validate the robustness of the computer vision algorithms across different hardware setups.

- Seamless Python and C++ Integration: Leveraged ROS2's capabilities to fuse Python (for computer vision and high-level control) with C++ (for low-level control and SLAM integration).
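Tracking, as opposed to per-frame detection, requires associating each new bounding box with an object seen in the previous frame. One common and simple way to do this is greedy intersection-over-union (IoU) matching, sketched below. This is an illustrative assumption rather than the tracker used in the project; the `Box` format, the function names, and the 0.3 threshold are hypothetical.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_detections(
    tracks: Dict[int, Box],
    detections: List[Box],
    iou_threshold: float = 0.3,
) -> Dict[int, int]:
    """Greedily pair detections with existing tracks, best overlap first.

    Returns a mapping of detection index -> track id. Detections missing
    from the result overlap no track well enough and would start new tracks.
    """
    pairs = sorted(
        (
            (iou(box, det), track_id, det_idx)
            for track_id, box in tracks.items()
            for det_idx, det in enumerate(detections)
        ),
        reverse=True,
    )
    matches: Dict[int, int] = {}
    used_tracks = set()
    for score, track_id, det_idx in pairs:
        if score < iou_threshold:
            break  # remaining pairs overlap even less
        if track_id in used_tracks or det_idx in matches:
            continue
        matches[det_idx] = track_id
        used_tracks.add(track_id)
    return matches
```

In a full pipeline, `detections` would hold the boxes produced by the detector for the current frame, and `tracks` the last known box of each tracked object.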

Technologies Used

The following technologies and tools were employed to bring this project to life:

- Python: Primary language for implementing computer vision algorithms and high-level robotic control.

- C++: Used for performance-critical tasks like SLAM and real-time control of the robotic base.

- ROS2: The middleware that facilitates communication between different components, ensuring seamless integration between Python and C++ nodes.

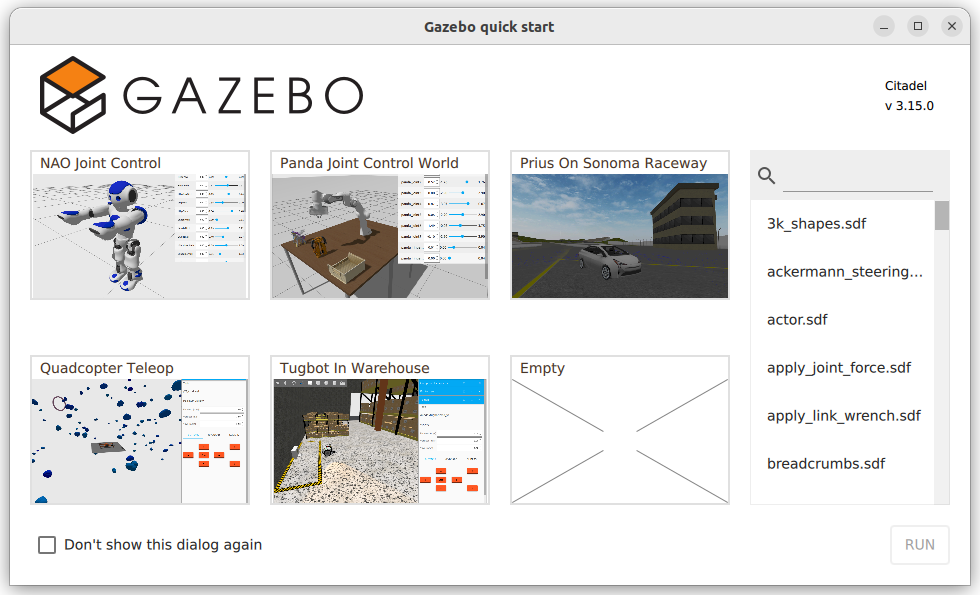

- Gazebo: Utilised for simulating the robot's environment and testing navigation algorithms before deployment on hardware.

- OpenCV: A crucial tool for implementing pose detection and object recognition tasks.

- YOLOv8: An object detection model used for real-time identification and localisation of objects in dynamic environments.

- LiDAR: Provides precise environmental mapping, allowing the robot to navigate complex surroundings.

- Ubuntu Server 22.04: The operating system used on the Raspberry Pi for deploying and running the full stack of robotic software.

- Raspberry Pi: The main computational platform for running the system, chosen for its versatility and compatibility with ROS2.

- SLAM: Used to map the environment and localise the robot in real time, enabling autonomous navigation in unfamiliar environments.
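Before LiDAR data can feed a SLAM algorithm, each scan has to be turned from polar range readings into points in the robot's frame. The sketch below shows that conversion under stated assumptions: the parameter names mirror the fields of the ROS2 `sensor_msgs/msg/LaserScan` message, but the function itself is hypothetical and works on plain Python values so that it runs without a ROS2 installation.

```python
import math
from typing import List, Sequence, Tuple


def scan_to_points(
    ranges: Sequence[float],
    angle_min: float,
    angle_increment: float,
    range_min: float,
    range_max: float,
) -> List[Tuple[float, float]]:
    """Convert a planar laser scan to (x, y) points in the sensor frame.

    Readings that are infinite, NaN, or outside [range_min, range_max]
    are dropped, since they do not correspond to a measured obstacle.
    """
    points = []
    for i, r in enumerate(ranges):
        if not math.isfinite(r) or r < range_min or r > range_max:
            continue
        theta = angle_min + i * angle_increment  # beam angle in radians
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points
```

The resulting points are what a scan matcher or occupancy-grid update would consume; transforming them into the map frame additionally needs the robot's current pose estimate.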

Project Gallery

Here are some images showcasing the various aspects of the project:

Demonstration

Watch a video of the pose recognition software detecting crucial keypoints:

Current Software Developments

Keep up to date with ongoing software development for this project on GitHub: